Suspect Development Systems: Databasing Marginality and Enforcing Discipline

Abstract

Algorithmic accountability law, focused on the regulation of data-driven systems like artificial intelligence (AI) or automated decision-making (ADM) tools, is the subject of lively policy debates, heated advocacy, and mainstream media attention. Concerns have moved beyond data protection and individual due process to encompass a broader range of group-level harms such as discrimination and modes of democratic participation. While a welcome and long overdue shift, the current discourse ignores systems like databases, which are viewed as technically “rudimentary” and often siloed from regulatory scrutiny and public attention. Additionally, burgeoning regulatory proposals like algorithmic impact assessments are not structured to surface important, yet often overlooked, social, organizational, and political economy contexts that are critical to evaluating the practical functions and outcomes of technological systems.

This Article presents a new categorical lens and analytical framework that aims to address and overcome these limitations. “Suspect Development Systems” (SDS) refers to: (1) information technologies used by government and private actors, (2) to manage vague or often immeasurable social risk based on presumed or real social conditions (e.g. violence, corruption, substance abuse), (3) that subject targeted individuals or groups to greater suspicion, differential treatment, and more punitive and exclusionary outcomes. This framework includes some of the most recent and egregious examples of data-driven tools (such as predictive policing or risk assessments), but critically, it is also inclusive of a broader range of database systems that are currently at the margins of technology policy discourse. By examining the use of various criminal intelligence databases in India, the United Kingdom, and the United States, we developed a framework of five categories of features (technical, legal, political economy, organizational, and social) that together and separately influence how these technologies function in practice, the ways they are used, and the outcomes they produce. We then apply this analytical framework to welfare system databases, universal or ID number databases, and citizenship databases to demonstrate the value of this framework in both identifying and evaluating emergent or under-examined technologies in other sensitive social domains.

Suspect Development Systems is an intervention in legal scholarship and practice, as it provides a much-needed definitional and analytical framework for understanding an ever-evolving ecosystem of technologies embedded and employed in modern governance. Our analysis also helps redirect attention toward important yet often under-examined contexts, conditions, and consequences that are pertinent to the development of meaningful legislative or regulatory interventions in the field of algorithmic accountability. The cross-jurisdictional evidence put forth across this Article illuminates the value of examining commonalities between the Global North and South to inform our understanding of how seemingly disparate technologies and contexts are in fact coaxial, which is the basis for building more global solidarity.

Introduction

In October 2020, a small group of protesters dressed in black and carrying black umbrellas to protect their privacy marched through downtown Phoenix, Arizona.1Kaila White, 18 Arrested During Protest Against Police in Downtown Phoenix on Saturday, Ariz. Republic (Oct. 18, 2020, 4:21PM), https://www.azcentral.com/story/news/local/phoenix-breaking/2020/10/18/downtown-phoenix-protest-police-arrest-nearly-every-attendee/3705969001/, [https://perma.cc/JK73-5R5B]; see Henry E. Hockeimer, Jr., Terence M. Grugan, Bradley M. Gershel & Jillian L. Andrews, Ballard Spahr, LLP, Report to the City, City Manager’s Office of an Investigation into the Criminal Street Gang Charges Filed Against “ACAB” 9–10 (2021) https://www.phoenix.gov/citymanagersite/Documents/Gang-Related-Summary-Report-08-12-2021.pdf [https://perma.cc/TB9A-5R2M]. This was one of many protests across the globe against police brutality and racism that continued into the fall of 2020 following the murder of George Floyd in May of that year.2White, supra note 1; see Hockeimer et al., supra note 1, at 20, 33. The demonstrators were followed by a group of police officers and subsequently arrested in the largest group arrest in Phoenix since June 2020 when the racial justice protests began.3White, supra note 1; see Hockeimer et al., supra note 1, at 20, 33. The demonstrators were charged with several offenses, including unlawful assembly,4White, supra note 1; Hockeimer et al., supra note 1, at 4. and were surreptitiously added to the state gang database as members of a gang called “ACAB” which law enforcement officials designated as an “extremist” group with “violent tendencies.”5Dave Biscobing, ‘Prime for Abuse’: Lack of Oversight Lets Phoenix Police Add Protesters to Gang Database, ABC 15 Ariz., (June 5, 2021, 3:08PM), https://www.abc15.com/news/local-news/investigations/protest-arrests/prime-for-abuse-lack-of-oversight-lets-phoenix-police-add-protesters-to-gang-database, [https://perma.cc/YZD9-2W8B]. But local news reports revealed that the ACAB gang was fictional.6. Id. Phoenix police and county prosecutors colluded to target and arrest the protestors, and invented a moniker based on the common protest chant “All Cops Are Bastards.”7. Id.; Hockeimer et al., supra note 1, at 3–4. This incident is one of a growing number of instances where government officials take advantage of nebulous databases to profile, target, and punish unfavorably viewed or politically marginalized individuals or groups.

For some databases, it is the mere fact of inclusion that places individuals and groups in a “suspect” category that can produce harmful results. For others, it is the omission from the database, which can often be deliberate, that places excluded individuals into a “suspect” category where they face punitive and harmful consequences. For example, digital ID databases, like India’s Aadhaar and Uganda’s Ndaga Muntu, are used by multiple facets of those respective governments and are meant to cover all residents or citizens for all relevant government programs. Yet these claims of universal coverage are not reflected in the databases’ implementation. In practice, communities most reliant on state support and some historically marginalized groups bear a vastly disproportionate burden of database errors and tangible harms, such as exclusion from essential services and government benefits.8. See, e.g., Sadiq Naqvi, In Its Current Form, Aadhaar Is Very Coercive and Invasive: Jean Dreze, Catchnews (Aug. 5, 2017, 7:51 PM), http://www.catchnews.com/india-news/in-its-current-form-aadhaar-is-very-coercive-and-invasive-jean-dreze-76224.html, [https://perma.cc/3BWR-L3Q4] (“One major problem is the exclusion of a significant minority of people for whom the system does not work. Even in Ranchi district, where the system has been in place for a whole year, more than 10% of cardholders are still unable to buy their monthly rations . . . . [I]t translates into something like 2.5 million people in Jharkhand being deprived of their food rations.”); Ctr. for Hum. Rts. and Glob. Just., Initiative for Soc. and Econ. Rts., & Unwanted Witness, Chased Away and Left to Die, 9 (2021) (“This research confirms that Uganda’s national ID has become an important source of exclusion for the poorest and most marginalized . . . . Based on the government’s own data and other official sources, the reliability of which we cannot guarantee, we have calculated that anywhere between 23% and 33% of Uganda’s adult population has not yet received a National Identity Card (NIC).”). Exclusion from these databases also facilitates stereotyping. This is because government narratives about the purpose of these systems suggest that those excluded are non-citizens, security risks, fraudsters, or members of other suspect categories, apparently justifying punitive outcomes of exclusion.9. See, e.g., Ctr. for Hum. Rts. and Glob. Just., Initiative for Soc. and Econ. Rts., & Unwanted Witness, supra note 8 (“Because Ndaga Muntu was primarily designed to be a national security system and not a social development program, it is perhaps not surprising that one major effect of the national ID system has been to exclude those who are considered non-Ugandans, security risks, or criminals.”); Davis Langat, Huduma Namba to Boost Security, Kenya News Agency (Dec. 18, 2020), https://www.kenyanews.go.ke/huduma-namba-to-boost-security/ [https://perma.cc/ENZ2-MPUG] (describing how government officials claimed Kenya’s Huduma Namba ID database would be a crime fighting tool used to boost public security); Jean Dreze, Reetika Khera, & Anmol Somanchi, Balancing Corruption and Exclusion: A Rejoinder, Ideas for India, (Sept. 28, 2020) https://www.ideasforindia.in/topics/poverty-inequality/balancing-corruption-and-exclusion-a-rejoinder.html [https://perma.cc/TT2D-JCHM] (highlighting how identity fraud by individuals in the welfare delivery systems was a primary justification for India’s Aadhaar database despite scant evidence that such fraud was a “serious problem”).

These databases have not received the heightened public concern, institutional priority and funding,10There are multiple legislative and other institutional efforts focused on AI. See, e.g., Advancing Artificial Intelligence Research Act of 2020, S.3891, 116th Cong. (2020) (proposing a national program to study AI and promote AI research); FUTURE of Artificial Intelligence Act of 2020, H.R.7559, 116th Cong. (2020) (proposing an advisory committee to advise the President on AI issues); William M. (Mac) Thornberry National Defense Authorization Act for Fiscal Year 2021, H.R. 6395, 116th Cong. (2020) (passing some parts of the previously cited bills through omnibus legislation); Int’l Telecomm. Union (ITU), United Nations Activities on Artificial Intelligence (2019) (identifying frameworks for trustworthy and inclusive AI systems as a key priority across UN agencies). There are also a range of philanthropic and educational organizations dedicating resources to assessing the social impacts of AI technologies. See, e.g., Press Release, Knight Found., The Ethics and Governance of Artificial Intelligence Fund Commits $7.6 Million to Organizations That Bolster Civil Society Efforts Around the World (July 10, 2017), https://knightexaminedfoundation.org/press/releases/the-ethics-and-governance-of-artificial-intelligence-fund-commits-7-6-million-to-organizations-that-bolster-civil-society-efforts-around-the-world/ [https://perma.cc/X5AE-QYYF]; Stanford Univ. Ctr. for Human-Centered A.I., https://hai.stanford.edu/ [https://perma.cc/MVF5-GJHL] (last visited May 8, 2022); Press Release, Univ. of Oxford, University Announces Unprecedented Investment in the Humanities (June 19, 2019), https://www.ox.ac.uk/news/2019-06-19-university-announces-unprecedented-investment-humanities [https://perma.cc/3AKJ-NVN7]; Grants Database, Alfred P. Sloan Found., https://sloan.org/grants-database [https://perma.cc/VBP8-TJ5X] (last accessed Apr.
6, 2022) (showing fourteen grants with the keyword “artificial intelligence”). or policy reform-based research11. See, e.g., Karen Yeung & Martin Lodge, Algorithmic Regulation: An Introduction, in Algorithmic Regulation 2–3 (Karen Yeung & Martin Lodge eds., 2019); Ada Lovelace Inst. & DataKind U.K., Examining the Blackbox: Tools for Assessing Algorithmic Systems (2020) https://www.adalovelaceinstitute.org/wp-content/uploads/2020/04/Ada-Lovelace-Institute-DataKind-uk-Examining-the-Black-Box-Report-2020.pdf [https://perma.cc/GCY8-D9UK]; David Freeman Engstrom & Daniel E. Ho, Algorithmic Accountability in the Administrative State, 37 Yale J. Regul. 800 (2020); Deirdre K. Mulligan & Kenneth A. Bamberger, Procurement as Policy: Administrative Process for Machine Learning, 34 Berkeley Tech. L. J. 773 (2019). directed at artificial intelligence (AI) or algorithmic/automated decision-making systems (ADS). For example, when the Chicago Police Department announced that it would no longer use its controversial predictive policing program in response to public criticism, it proudly announced plans to revamp its heavily criticized gang database to minimal outcry.12Annie Sweeney and John Bryne, Chicago Police Announce New Gang Database as Leaders Hope to Answer Questions of Accuracy and Fairness, Chi. Trib. (Feb. 26, 2020, 4:29 PM), https://www.chicagotribune.com/news/breaking/ct-chicago-police-gang-database-overhaul-react-20200226-gisz55rytzbsdkyy4kmbb4jrou-story.html [https://perma.cc/8XC5-L3WD]; Jeremy Gorner & Annie Sweeney, For Years Chicago Police Rated the Risk of Tens of Thousands Being Caught Up in Violence. That Controversial Effort Has Quietly Been Ended, Chi. Trib. (Jan. 24, 2020, 8:55 PM) https://www.chicagotribune.com/news/criminal-justice/ct-chicago-police-strategic-subject-list-ended-20200125-spn4kjmrxrh4tmktdjckhtox4i-story.html [https://perma.cc/4RDH-ARMP]; Chicagoans for an End to the Gang Database v. City of Chicago, MacArthur Just. Ctr. 
(last accessed May 7, 2022), https://www.macarthurjustice.org/case/chicagoans-for-an-end-to-the-gang-database/ (criticizing the gang database for lacking guidelines when adding people to the database); Rashida Richardson & Amba Kak, It’s Time for a Reckoning About This Foundational Piece of Police Technology, Slate (Sept. 11, 2020) https://slate.com/technology/2020/09/its-time-for-a-reckoning-about-criminal-intelligence-databases.html [https://perma.cc/NXA7-ZEUV]; see also Joseph M. Ferguson & Deborah Witzburg, City of Chi., Off. of Inspector Gen., Follow-Up Inquiry On The Chicago Police Department’s “Gang Database” 29 (2021) (“CPD has consistently maintained that its collection of gang information is critical to its crime fighting strategy but has not yet clearly articulated the specific strategic value of the data to be collected in the planned CEIS.”). Meanwhile, arguably all of the most controversial technology projects across the Global South in the last decade have been large-scale database projects, which receive relatively little media attention and research funding.13. See Payal Arora, The Bottom of the Data Pyramid: Big Data and the Global South, 10 Int’l J. of Commc’n 1681 (2016) (arguing that database projects in the Global South have been neglected in critical big data discourse despite their profound impacts on surveillance, privacy, and equity). Instead, in popular and policy discourse, databases are characterized as foundational and passive raw material that enables the creation of more advanced algorithmic systems that can sort, prioritize, predict, and so on.14For accounts that describe databases as enabling more sophisticated technologies, see generally Woodrow Hartzog & Evan Selinger, I See You: The Databases That Facial-Recognition Apps Need to Survive, Atlantic (Jan. 
23, 2014), https://www.theatlantic.com/technology/archive/2014/01/i-see-you-the-databases-that-facial-recognition-apps-need-to-survive/283294/ [https://perma.cc/WE8F-82WQ]; Kevin Driscoll, From Punched Cards to “Big Data”: A Social History of Database Populism, 1 commc’n +1, 1, 2 (2012) (“Implicit in this metaphor is a database—or, more likely, a network of databases—from which the engine (code) draws its fuel (data.)”); Martin Lodge & Andrea Mennicken, Reflecting on Public Service Regulation by Algorithm, in Algorithmic Regulation 178, 185 (Karen Yeung & Martin Lodge eds., 2019) (“[T]he use of different databases built from tax returns, complaints data, social media commentary, and such like—offers the opportunity to move away from a reliance on predefined performance metrics towards bringing together different types of data.”). Because AI and ADS are viewed as more complex technologies, databases are invariably positioned by many scholars as necessary for the creation and maintenance of, but “subordinate” to, these newer systems.15Driscoll, supra note 14, at 2

(“In the emerging scholarship concerning the role of algorithms in online communication, databases are often implicated but rarely of principle concern. This subordinate position may be due to the ambiguous relationship between algorithm and database. Whereas an algorithm, implemented in running code, is self-evidently active, a database appears to serve a largely passive role as the storehouse of information.”). This is compounded by a lack of definitional clarity in public discourse around what does or does not “count” as AI, given vague and evolving technical thresholds.16P.M. Krafft, Meg Young, Michael Katell, Karen Huang & Ghislain Bugingo, Defining AI in Policy Versus Practice, arXiv cs.CY, Dec. 2019, https://arxiv.org/pdf/1912.11095.pdf [https://perma.cc/RH4E-MND2]; Ian Bogost, ‘Artificial Intelligence’ Has Become Meaningless, Atlantic (Mar. 4, 2017), https://www.theatlantic.com/technology/archive/2017/03/what-is-artificial-intelligence/518547/ [https://perma.cc/T65B-6WEG]. This definitional ambiguity has policy implications as well, where the meanings ascribed to the terms AI or ADS can determine the scope of any regulatory effort.17Rashida Richardson, Defining and Demystifying Automated Decision Systems, 81 Md. L. Rev. 1, 3–9 (2022).

Recent policy discourse, now commonly referred to under the rubric of “algorithmic accountability,”18. See Yeung & Lodge, supra note 11, at 9–10; see also Ada Lovelace Inst. & DataKind U.K., supra note 11; Engstrom & Ho, supra note 11; Mulligan & Bamberger, supra note 11. has drawn considerable attention to how algorithmic systems serve to entrench or exacerbate systemic and historical discrimination against marginalized groups. While this emphasis on a deeper and contextual understanding of social harms is welcome, emergent policy frameworks for evaluating these systems, like Algorithmic Impact Assessments (AIAs) and algorithmic audits, are limited in their scope of review and analysis; these proposals still struggle to unearth the complete spectrum of structural technological inequities and tacit modes of discipline, control, and punishment.19. See generally Jacob Metcalf, Emanuel Moss, Elizabeth Watkins, Ranjit Singh & Madeleine Clare Elish, Algorithmic Impact Assessments and Accountability: The Co-Construction of Impacts, in FAccT ‘21: Proceedings of the 2021 ACM Conf. on Fairness, Accountability, and Transparency 735 (2021) (exploring limitations of impact assessments in algorithmic and non-algorithmic domains); Rashida Richardson, Racial Segregation and the Data-Driven Society: How Our Failure to Reckon with Root Causes Perpetuates Separate and Unequal Realities, 36 Berkeley Tech. L. J. 101, 126–34 (forthcoming 2022) (demonstrating how evaluations of algorithmic systems in the wild overlook relevant historical and social contexts that affect algorithm performance); Alfred Ng, Can Auditing Eliminate Bias from Algorithms?, Markup (Feb. 23, 2021) https://themarkup.org/ask-the-markup/2021/02/23/can-auditing-eliminate-bias-from-algorithms [https://perma.cc/ANS2-BSMB] (highlighting how bias audits were mischaracterized by companies, which brings their use into question); Ada Lovelace Inst., Algorithmic Accountability for the Public Sector: Learning From the First Wave of Policy Implementation 21–28 (2021), https://www.opengovpartnership.org/wp-content/uploads/2021/08/algorithmic-accountability-public-sector.pdf [https://perma.cc/46SV-Y2KS] (highlighting real-world use cases of algorithmic impact assessments and audits, and discussing the limitations and assumptions of these frameworks).

Responding to this combination of definitional ambiguity for policy intervention and the lack of a systematic way to evaluate harms, this Article proposes “Suspect Development Systems” (SDS) as both a definitional category and framework for analysis. SDS can be defined as (1) information technologies used by government and private actors, (2) to manage vague or often immeasurable social risks20Social risk is a normative concept. Because “institutions are not organized around a single, cohesive notion of order,” there is no universal definition of social risks. Instead, institutions have “unique definitions of risk” and logics for managing or dealing with risks. See, e.g., Richard V. Ericson & Kevin D. Haggerty, Policing the Risk Society 43 (1997); see also Glossary, in Criminalization, Representation, Regulation: Thinking Differently About Crime 443 (Deborah Brock, Amanda Glasbeek & Carmela Murdocca eds., 2014) (defining risk as “[a] particular discourse that has emerged alongside neoliberalism through which events like crime are imagined.”). based on presumed or real social conditions (e.g., violence, corruption, substance abuse), (3) that subject targeted individuals or groups to greater suspicion, differential treatment, and punitive and exclusionary outcomes.

We conceptualize SDS as a normative category that acknowledges how technologies like AI, ADS, and databases amplify structural inequities and the modes through which they discipline and control individuals and groups.21For a Foucauldian analysis of “disciplinary power” and “population management power,” see Dean Spade, Normal Life: Administrative Violence, Critical Trans Politics, and the Limits of Law 52–72 (2015) (“These programs operate through purportedly neutral criteria aimed at distributing health and security and ensuring order. They operate in the name of promoting, protecting, and enhancing the life of the national population and, by doing so, produce clear ideas about the characteristics of who the national population is and which ‘societal others’ should be characterized as ‘drains’ or ‘threats’ to that population.”). Systems described and understood as “databases” are by no means the only kinds of SDS. Indeed, more recent ADS tools like predictive policing are clear examples of SDS and would benefit from the multi-pronged analysis set forth in this Article. Overall, SDS offers a broader framework within which to build an advocacy agenda that is inclusive of this diverse range of systems, rather than focusing only on the most recent or egregious examples of information technologies. However, in this Article, we focus predominantly on databases as they have received relatively less attention than AI or ADS and would especially benefit from strategic reframing. This Article is in conversation with criminology and surveillance studies scholarship that illuminates the reasons why databases might have proliferated as a key technique of penal governance within the criminal justice system and beyond.22. See Katja Franko Aas, From Narrative to Database: Technological Change and Penal Culture, 6 Punishment & Soc’y 379 (2004); Michaelis Lianos & Mary Douglas, Dangerization and the End of Deviance: The Institutional Environment, 40 Brit. J. of Criminology, 261 (2000); Toshimaru Ogura, Electronic Government and Surveillance-Oriented Society, in Theorizing Surveillance: The Panopticon and Beyond (David Lyon ed., 2006); Gary T. Marx, Undercover: Police Surveillance in America, 208–29 (1988). For an analysis of the impact of databases in social work, see Nigel Parton, Changes in the Form of Knowledge in Social Work: From the ‘Social’ to the ‘Informational’?, 38 Brit. J. of Soc. Work 253–69 (2008). This work explores how the decontextualized and “byte-like”23Scott Lash, Critique of Information 2 (2002). mode of managing information within the seemingly objective structures of the computerized database has enabled the ground-level state apparatus to be more detached and unaccountable for the consequences of these decisions. This veneer of objectivity and routineness associated with databases contributes to the lack of public and regulatory scrutiny when they are introduced.

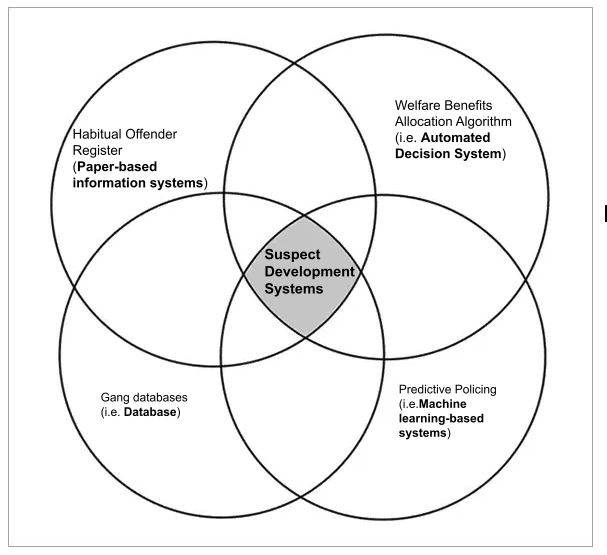

This visual illustrates that SDS can be applied to a broad range of technological systems, including systems that are identified in popular and policy discourse as Automated Decision Systems (ADS), databases, machine-learning based systems, or paper-based information systems. SDS provides a normative categorization that deliberately de-centers the technological form of the system, instead calling attention to its pernicious social impacts of creating suspect categories of people. While the wealth of examples in this Article demonstrates the ubiquity of SDS across social domains, the visual clarifies that not all algorithmic systems or databases will be a type of SDS. For example, driver’s license databases, school assignment algorithms, or price-discriminating machine-learning-based algorithms on e-commerce platforms do not fit our definition of SDS, even as they might attract other forms of critical inquiry.


Criminal intelligence databases, which have been used by police agencies for over a century to profile and target based on the risk of criminality, are paradigmatic examples of SDS.24. See David L. Carter, U.S. Dept. of Just., Law Enforcement Intelligence: A Guide for State, Local, and Tribal Law Enforcement Agencies 31–49 (2d ed. 2009) (detailing the history of U.S. law enforcement intelligence practices including the creation and use of criminal intelligence databases). “Criminal intelligence databases are populated with information about people who should be watched or monitored because they might have committed a crime or might commit a future crime.”25James B. Jacobs, The Eternal Criminal Record 15 (2015). Notable examples of criminal intelligence databases include sex offender registries, prescription drug monitoring programs, London’s Crimint, and the FBI’s National Crime Information Center, which hosts the agency’s controversial Terrorist Watchlist and the Violent Person file. In this Article, we examine different variants of such databases in the U.S., the U.K., and India. Despite different socio-political contexts and institutional histories, these databases share a range of common features, conditions, and consequences.

Modern gang databases in the U.S. have existed since at least the late 1980s26Stacey Leyton, The New Blacklists: The Threat to Civil Liberties Posed by Gang Databases, in Crime Control and Social Justice: The Delicate Balance 109, 111 (Darnell F. Hawkins, Samuel L. Myers, Jr. & Randolph N. Stone, eds., 2003). and continue to evolve and proliferate as crime-fighting tools alongside newer modalities, such as predictive policing. Gang databases exist as both centralized and decentralized information systems primarily used by criminal justice actors and institutions to accumulate, analyze, and disseminate information about gangs and gang members for a variety of interests and priorities.27James B. Jacobs, Gang Databases: Context and Questions, 8 Criminology & Pub. Pol’y 705, 705–08 (2009). These databases are compiled and used by various criminal justice actors and institutions based on the belief that they function as a “force” or institutional multiplier increasing the overall efficiency, speed, and performance of all agencies without having to increase staffing or expend additional funds.28. See James Lingerfelt, Technology as a Force Multiplier, in Technology for Community Policing Conference Report 29 (1997). Gang databases exist to make intelligence and investigative information accessible to various government actors and institutions, though the needs and rationales for use vary.29. See Leyton, supra note 26, at 109–12; Jacobs, supra note 27, at 705–07; Joseph M. Ferguson, City of Chi. Off. of Inspector Gen., Review of the Chicago Police Department’s “Gang Database” 25–31 (2019). Gang databases have been used to secure mass arrests and indictments, but since such actions have disproportionately included Black and Latinx youth,30. See, e.g., Citizens for Juv. Just., We are the Prey: Racial Profiling of Youth in New Bedford, 20–22 (2021); K. Babe Howell, Gang Policing: The Post Stop-and-Frisk Justification for Profile-Based Policing, 5 U. Denv. Crim. L. Rev. 1, 16–17 (2015). these databasing efforts have also been the source of litigation31. See, e.g., Complaint, Chicagoans for an End to the Gang Database v. City of Chicago, No. 18-cv-4242 (N.D. Ill. June 19, 2018) (voluntarily dismissed Sept. 2, 2020) https://www.macarthurjustice.org/wp-content/uploads/2018/06/cpd_gang_database_class_action_complaint.pdf [https://perma.cc/PQ7E-HK8N]; Steph Machado, Community Group Files Suit Over Providence ‘Gang Database’, WPRI (July 23, 2019, 9:59 PM), https://www.wpri.com/news/local-news/providence/community-group-files-suit-over-providence-gang-database/ [https://perma.cc/LLQ2-TAVY]. and highly critical public reports.32. See, e.g., Ferguson & Witzburg, supra note 12.

In the U.K., while intelligence files on gangs and gang members have been maintained for decades, the early 2000s saw the computerization of gang databases in large cities. In this Article, we focus on the Gangs Violence Matrix (“Gangs Matrix”), a database of purported gang members launched in 2012 as part of the U.K. Government’s “war on gangs.” The Gangs Matrix is used as a risk management tool to assess and rank suspected gang members deemed most likely to commit a violent crime and to inform local police strategies to suppress violent crime.33James A. Densley & David C. Pyrooz, The Matrix in Context: Taking Stock of Police Gang Databases in London and Beyond, 20 Youth Just. 11, 17–18 (2020); Amnesty Int’l, Trapped in the Matrix: Secrecy, Stigma, and Bias in the Met’s Gangs Database 2–3 (2018) https://www.amnesty.org.uk/files/reports/Trapped%20in%20the%20Matrix%20Amnesty%20report.pdf [https://perma.cc/QJE8-JUGH]. According to recent accounts, at any given point, there are an estimated 3,000–4,000 individuals listed in the Gangs Matrix.34Densley & Pyrooz, supra note 33, at 11; Amnesty Int’l, supra note 33, at 15. The purpose of this databasing effort is ostensibly to “audit” the gang landscape of cities, target individuals with heightened surveillance, as well as deterrence messaging or “nudges” to leave gang life.35. See Amnesty Int’l, supra note 33, at 2–4, 20–22; Gang Violence Matrix, Metro. Police, https://www.met.police.uk/police-forces/metropolitan-police/areas/about-us/about-the-met/gangs-violence-matrix/ [https://perma.cc/GMS8-KBCQ] (last visited May 7, 2022).

In India, there are a range of police databases with records of individuals who are believed to be deserving of heightened surveillance on account of their perceived dangerousness and likelihood of committing future crimes.36. See Mrinal Satish, “Bad Characters, History Sheeters, Budding Goondas and Rowdies”: Police Surveillance Files and Intelligence Databases in India, 23 Nat’l L. Sch. India Rev. 133 (2011). These databases have their roots in colonial legislation that targeted specific communities that were designated as “criminal tribes.”37The Criminal Tribes Act of 1871 (CTA) led to the branding of entire Indian communities as “criminal tribes,” followed by record-keeping of their details and physical movements. These requirements imposed severe restrictions on movement, routine physical surveillance, and “limited…access to legal redress.” See Mark Brown, Postcolonial Penality: Liberty and Repression in the Shadow of Independence, India c. 1947, 21 Theoretical Criminology 186, 186 (2017). Other accounts argue that notions of group criminality associated with these tribes in fact predate colonial India as a fall-out of the Hindu caste system, and that the introduction of the CTA simply systematized this discrimination and made these groups vulnerable to the constant threat of surveillance and violence at the hands of state actors. See, e.g., Shivangi Narayanan, Guilty Until Proven Guilty: Policing Caste Through Preventive Policing Registers in India, 5 J. Extreme Anthropology 111 (2021); Mukul Kumar, Relationship of Caste and Crime in Colonial India: A Discourse Analysis, 39 Econ. & Pol. Wkly. 1078 (2004); Anastasia Piliavsky, The “Criminal Tribe” in India Before the British, 57 Compar. Stud. in Soc’y & Hist. 373 (2015). This policing approach towards marginalized communities continues, in part through the operation of surveillance databases.38Satish, supra note 36, at 135.
The current material form of these databases39For an explanation of the terms “surveillance databases” and “surveillance registers” to describe these record keeping systems, see Mrinal Satish, “Bad Characters, History Sheeters, Budding Goondas and Rowdies”: Police Surveillance Files and Intelligence Databases in India, 23 Nat’l L. Sch. India Rev. 133 (2011). continues to be paper records stored in official files called “registers,”40For a listing of different categories of surveillance registers, see Santana Khanikar, State, Violence, and Legitimacy in India, 41 (2018). but there are ongoing efforts to digitize these records.41Ameya Bokil, Avaneendra Khare, Nikita Sonavane, Srujana Bej, & Vaishali Janarthanan, Settled Habits, New Tricks: Casteist Policing Meets Big Tech in India, TNI (May 2021), https://longreads.tni.org/stateofpower/settled-habits-new-tricks-casteist-policing-meets-big-tech-in-india [https://perma.cc/3CKG-7B2B]. This is part of a broader institutional push for digitization of government functions in low and middle-income countries as a metric of economic development. There are multiple kinds of surveillance databases maintained by each police station that cover suspect individuals residing in that particular precinct. While the official and colloquial names for such databases and the criteria for inclusion vary from state to state, common categories include the “history sheet,” “bad character” registers, and “rowdy” registers.42Satish, supra note 36, at 135, 140–41, 146. Common amongst these databases is their inclusion of individuals who lack existing criminal records on the grounds that police believe they are likely to commit crimes or “disturb the peace.” Being included in these databases triggers a range of consequences like being subjected to heightened physical surveillance (e.g., regular home visits), increased chances of arrest, and unfavorable bail and sentencing decisions.43. Id. at 133–34.

These databases can all be helpfully understood as SDS in the way that they manage vague and often immeasurable social risks, subjecting targeted individuals to greater suspicion, differential treatment, and punitive and exclusionary outcomes. While they present as bureaucratic systems of record-keeping and classification, we explore the myriad forms of disciplinary power they exert over marginalized and historically stigmatized groups. The bureaucratic patina of these systems can conceal the violent nature of this form of control, given the looming threat of scrutiny and brutality that often accompanies their use.44On the violent character of bureaucratic systems see Spade, supra note 21, at 22–29. Analyses of databases, like SDS, should be differentiated from the literature on “automated suspicion algorithms”45. See Michael L. Rich, Machine Learning, Automated Suspicion Algorithms, and the Fourth Amendment, 164 U. Pa. L. Rev. 871 (2016). or “big data blacklists.”46Margaret Hu, Big Data Blacklisting, 67 Fla. L. Rev. 1735 (2016). The latter provides legal frameworks to analyze the use of automated statistical tools that predict and identify individuals deserving of additional scrutiny and other restrictions on account of the social risk they present. Instead of focusing on technical tools, our framework of SDS, shaped by case studies on criminal intelligence databases, centers on the grounded social, political, and economic contexts, organizational practices and technical features that structure databases. In many of these systems, the decision of who is “suspicious” is not solely determined by statistical or other automated tools, but instead by government officials who are in turn influenced by organizational, legal, and social practices. 
In taking this approach, we hope to address not just emergent and advanced forms of government databases, but also provide a category that is broad enough to encompass the impact of so-called ‘legacy’ or manual systems that continue to operate alongside more recent “database” modes.

The SDS category and framework can help create a common discourse around systems that cut across spheres of governance and bridge geographical and temporal divides. Similar to the creation and maintenance of “suspect” categories of people like the habitual offender or the gang member, racialized tropes coming out of political discourse, like “the welfare-dependent mother” or “the illegal immigrant,” are replicated in database use and in other sectors of governance and the private sector as well.47Philip Alston, Report of the Special Rapporteur on Extreme Poverty & Human Rights 10, U.N. Doc. A/74/493 (Oct. 11, 2019) (noting that conservative politicians have historically employed
tropes to discredit inclusive welfare policy); U.S. Fed. Trade Comm’n, Data Brokers: A Call for Transparency and Accountability 29, C-3 (2014) (describing how negative stereotypes and tropes can be replicated in consumer profiles created and shared by data brokers). The SDS framework illuminates the connections between recent moves towards digitizing welfare databases in the U.K.;48Amos Toh, Human Rts. Watch, Automated Hardship: How the Tech-Driven Overhaul of the U.K.’s Social Security System Worsens Poverty (2020). the new Argentinian database that facilitates targeting of minors who are “alleged offenders”;49Karen Hao, Live Facial Recognition is Tracking Kids Suspected of Being Criminals, MIT Tech. Rev. (Oct. 9, 2020), https://www.technologyreview.com/2020/10/09/1009992/live-facial-recognition-is-tracking-kids-suspected-of-crime/ [https://perma.cc/3UZZ-SWN5]. the extensively detailed police database maintained by Chinese police agencies that is crucial to the surveillance, persecution and large-scale internment of Muslims in the Xinjiang region;50Yael Grauer, Revealed: Massive Chinese Police Database, Intercept (Jan. 29, 2021, 3:00 AM), https://theintercept.com/2021/01/29/china-uyghur-muslim-surveillance-police/ [https://perma.cc/384Y-N8MM]. the proliferation of nationwide mandatory biometric ID databases in developing countries;51. See Access Now, National Digital Identity Programmes: What’s Next? 7–17 (Nov. 2019), https://www.accessnow.org/cms/assets/uploads/2019/11/Digital-Identity-Paper-Nov-2019.pdf [https://perma.cc/6YJJ-X29J] (highlighting case studies from India, Tunisia, and Estonia). and the massive citizenship databases being introduced for immigration control in the European Union, India, and the U.S.52.
See Databases for Deportation, Statewatch, https://www.statewatch.org/deportation-union-rights-accountability-and-the-eu-s-push-to-increase-forced-removals/deportations-at-the-heart-of-eu-migration-policy/databases-for-deportations/ [https://perma.cc/64CY-HKCR] (explaining how “[t]he political decision to try to step up expulsions from the EU has led to the transformation of existing databases and the introduction of new ones”). See also infra Section II.C, for descriptions of databases in the U.S. and India. This framework also allows us to draw connections between historical practices and modern uses of databases to develop “suspect” categories of people, such as linking current gang database practices in the U.K. to the British colonial strategy of criminalizing entire communities (designated “criminal tribes”)53Jasbinder S. Nijjar, Echoes of Empire: Excavating the Colonial Roots of Britain’s “War on Gangs”, 45 Soc. Just. 147, 151 (2018). and extrapolating lessons from the use of watchlists to suppress political dissent in the U.S. during the Civil Rights and anti-Vietnam war movements.54. See, e.g., Electronic Surveillance Within the United States for Foreign Intelligence Purposes: Hearing on S. 3197 Before the Subcomm. on Intel. and the Rts. of Ams. of the S. Select Comm. on Intel., 94th Cong. (1976); Frank Kusch, Battleground Chicago: The Police and the 1968 Democratic National Convention (2008); Jeffrey Haas, The Assassination of Fred Hampton: How the FBI and the Chicago Police Murdered a Black Panther (2010). Each of these government data projects has defined who is included and who is excluded from the vision of the nation state and has facilitated the conditions for profiling, stigmatizing, or even eliminating those who are excluded.
For criminal intelligence databases, inclusion in these databases triggers these consequences, whereas in welfare or citizenship databases, exclusion or removal is used to punish or otherwise disempower individuals and groups.

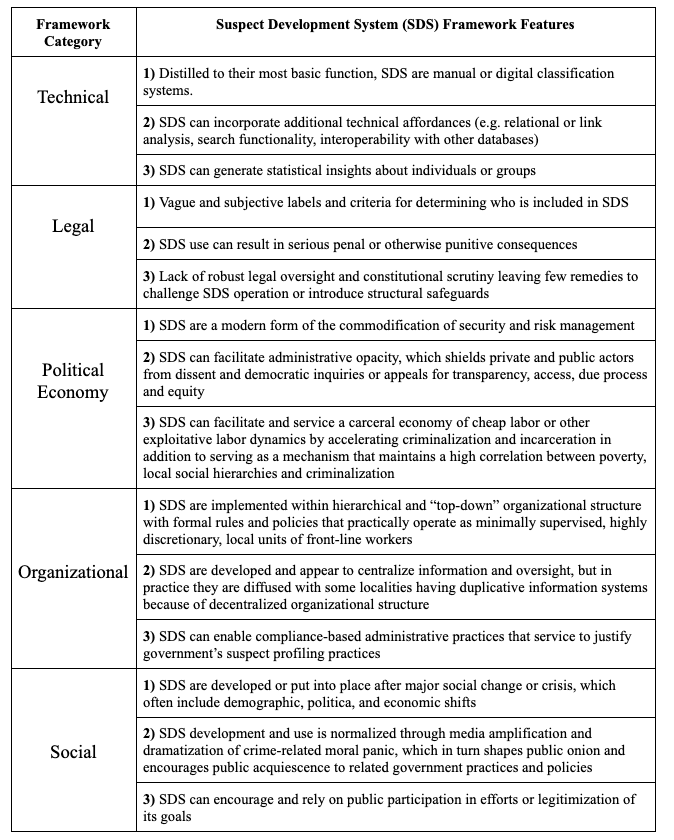

The Article is organized as follows. In Section I, through a detailed examination of the use of criminal intelligence databases in the U.S., U.K., and India, we present a framework of features that are crucial to understanding and evaluating SDS. In this Section, we explore five categories of features: technical, legal, political economy, organizational, and social. Each category distills the common features and insights observable in these SDSs, which remain underexplored in existing algorithmic accountability discourse. Section II demonstrates the value of SDS as a framework applied to contemporary government databases outside of the criminal justice system. We apply the framework to other systems that could helpfully be understood as SDS: welfare system databases, universal or ID number databases, and citizenship databases. Finally, we conclude by discussing how our analytical framework of SDS offers conceptual, legal, and strategic insights towards addressing the harms caused by these systems or doing away with them altogether where such harms cannot be remedied. SDS, as a defined term and analytical framework, provides an expanded lens with which to define and identify automated decision systems (ADS) by offering a systematic understanding of the socio-technical context, without which the harms of these systems cannot be addressed.

I. Suspect Development System Framework

SDSs are typically unique to the jurisdictions in which they are used because local conditions influence their development and use. However, as their use and consequences become more prevalent globally, such jurisdictional distinctions may be less salient and practical. Policymakers, advocates, and scholars are increasingly interested in identifying, evaluating, and addressing the implications of emergent technologies in systematic and concentrated ways, an approach that some say can only be achieved if there is a categorical framework for analysis.55. See, e.g., Joshua P. Meltzer, Cameron Kerry, & Alex Engler, Submission to the EC White Paper on Artificial Intelligence (AI): The Importance and Opportunities of Transatlantic Cooperation on AI (2020), https://www.brookings.edu/wp-content/uploads/2020/06/AI_White_Paper_Submission_Final.pdf [https://perma.cc/QW2R-WLK3] (emphasizing the importance of global cooperation on regulation to allow governments to maintain legal rules and values); Inst. of Elec. & Elec. Eng’rs, IEEE-USA Position Statement: Artificial Intelligence Research, Development, & Regulation 2 (2017), https://ieeeusa.org/wp-content/uploads/2017/07/FINALformattedIEEEUSAAIPS.pdf [https://perma.cc/RB8P-XXNC] (recommending that a federal interagency panel should determine how to coordinate and enforce federal AI regulation); Jennifer Kuzma, Jordan Paradise, Gurumurthy Ramachandran, Jee-Ae Kim, Adam Kokotovich & Susan M. Wolf, An Integrated Approach to Oversight Assessment for Emerging Technologies, 28 Risk Analysis 1197 (2008) (proposing an integrated oversight assessments approach for evaluating emerging technologies).

In this Section we present a framework for SDS that includes five categories of features: technical, legal, political economy, organizational, and social.56. See generally Stephen D. Mastrofski & James J. Willis, Police Organization Continuity and Change: Into the Twenty-First Century, 39 Crime & Just. 55, 79 (2010) (noting that technologies have a number of components including material, logical, and social). We use the term “features” expansively to include typical characteristics, structural conditions, consequences of SDS development, and use. These categories were developed by examining criminal intelligence databases used by law enforcement and other government actors to profile and target people considered to be a social risk for criminality in the U.S., U.K., and India.57We examined the Gangs Violence Matrix in the U.K., at least six state and local gang databases in the U.S., and a range of surveillance databases in at least five states in India. These databases have different colloquial terminologies (such as gang or habitual offender) and rhetorical accounts that convey who will be targeted and the social risks they pose to a wide variety of audiences, yet they share common or universal features and collectively provide insights that remain unexamined in legal scholarship58Most legal scholarship regarding data-driven technologies generally and the criminal justice system specifically tend to focus on specific technologies or sectoral concerns. Though there are a few notable, recent publications that seek to explore legal and social issues through a categorical framework. See, e.g., Aziz Z. Huq, Racial Equity in Algorithmic Criminal Justice, 68 Duke L.J. 1043 (2019); Vincent M. Southerland, The Intersection of Race and Algorithmic Tools in the Criminal Legal System, 80 Md. L. Rev. 487 (2021). and policy discourse. 
Thus, we chose to examine countries in the Global North and South that represent different stages of SDS development to explore where such commonalities emerge and how they can expand our understanding of how these seemingly disparate technologies are coaxial.

Our framework is not exhaustive. Instead, we have identified three key features in each category to demonstrate the analytical utility of each category and the framework, while leaving other features open to future development and expansion. Additionally, no category can or should be understood in isolation. The interactions within and between these framework categories influence how SDSs function, the ways they are used, and the outcomes they produce. For example, as political theorist Langdon Winner has argued, “the adoption of a given technical system actually requires the creation and maintenance of a particular set of social conditions as the operating environment of that system.”59 . Langdon Winner, The Whale and the Reactor: A Search for Limits in an Age of High Technology 32 (1986). For a discussion of SDS social features, see infra Section I.E. Similarly, structures and practices explored in these organizational categories can help explain the practical implications of SDS use, in addition to gaps or oversights in relevant legal rules and regulations reviewed in the legal category. Thus, our framework can enable robust and tactical assessments that promote a more nuanced and holistic understanding of the uses, risks, and consequences of SDS, particularly providing insight into how these technologies amplify cumulative disadvantage60Cumulative disadvantage is defined as “the ways in which a decision based on the evaluation of the groups in which an individual has been assigned by chance, or by ill-informed choice, shapes the opportunities that are available to her.” Oscar H. Gandy, Jr., Coming to Terms with Chance: Engaging Rational Discrimination and Cumulative Disadvantage 74 (2009). and structural inequities. This framework can both inform civil society advocacy and research agendas, as well as be operationalized by state and private actors in deciding whether (and how) to develop and use such systems or governing relevant spheres of influence.

A. Technical Features

The technological features of SDSs shape and structure how these systems generate information and knowledge for the entities that use them. This includes tangible characteristics of these systems such as: types of physical or digital interfaces; modes of collecting, storing, and managing information; and statistical techniques that might be incorporated into these systems. The objective here is not to create technical thresholds for what does and does not count as an SDS. Indeed, this framework seeks to de-center technical descriptors that are often used to create harmful hierarchies of analysis between more and less “advanced” systems. Through a detailed examination of criminal intelligence databases across contexts, this category highlights the range and variability in how such systems are designed, the intensity of technical tasks they afford, and the complex relationship between paper-based records and digital databases. These features are typically communicated in technical jargon or mathematical terms that cause misinterpretations outside of the disciplinary contexts in which they were coined.61. See Stephen C. Rea, A Survey of Fair and Responsible Machine Learning and Artificial Intelligence: Implications of Consumer Financial Services, 20–25 (2020) (describing semantic gaps with respect to how AI concepts are used or misinterpreted in different disciplines and contexts); Richardson, supra note 17, at 788. (explaining how the misappropriation of technical jargon from different disciplines and contexts is ill-advised and causes confusion). This jargon can also serve to mystify or obfuscate these systems in ways that prevent critique or broader public engagement.62Tressie McMillan Cottom, Where Platform Capitalism and Racial Capitalism Meet: The Sociology of Race and Racism in the Digital Society, 6 Socio. 
Race & Ethnicity 441, 443 (2020) (arguing that the use of “needlessly complex technical jargon” is one aspect of an obfuscation strategy used by individual and institutional actors to inhibit access to information that could reveal inherent biases in technology). For these reasons, we focus this category on relating particular technical features in terms of the functionality they afford their users and the consequences these design choices present.

First, distilled to their most basic function, SDSs include simple classification systems that can be manual or digital. Criminal intelligence databases are manual or digital classification systems that categorize individuals or groups into labels, such as habitual offenders or gang members. Along with names, these records can include a range of details about a person including photographs or other biometric information, contact information (e.g., residence, phone number), demographic information (e.g., age, gender, religion, race), identifying marks (e.g., tattoos), socioeconomic information (e.g., marital status), criminal history and membership of a particular group, and names of affiliates (either members of the same gang or informal associations with others on the list). Surveillance databases in India, for example, have a great deal of continuity with those used decades earlier during colonial rule that included the early use of visual cues like different colored ink to categorize an individual’s level of dangerousness.63Radhika Singha, Punished by Surveillance: Policing ‘Dangerousness’ in Colonial India, 1872–1918, 49 Mod. Asian Stud. 241, 245 (2015) (“The process . . . was one which turned the badmaash of popular discourse into a ‘red-ink’ or ‘black-ink’ badmaash, according to the colour of the ink used to categorize him in a police surveillance register.”). “Badmaash” here refers to the Hindi language colloquial term for person with bad character, or miscreant. See Alastair Richard McClure, Violence, Sovereignty, and the Making of Colonial Criminal Law in India, 1857-1914, 6 (July 2017) (PhD dissertation, University of Cambridge) https://www.repository.cam.ac.uk./bitstream/handle/1810/268185/McClure-2017-PhD.pdf?sequence=3&isAllowed=n [https://perma.cc/Y36H-957X]. Color-coding continues to be used to highlight different degrees of risk, like in California’s gang database, CalGang, and the United Kingdom’s Gangs Matrix.64.
See Leyton, supra note 26, at 111 (“The system also includes photographs . . . color coded to show associates’ gang affiliations.”); Amnesty Int’l, supra note 33, at 6–7 (“The ‘harm score’ assigned to each individual on the matrix is labelled red, amber or green.”). Paper-based systems in India are intended to be digitized as part of a broader digital policing reform project,65. See, e.g., Delhi Police, Mission Mode Project: RFP for System Integrator (I), https://silo.tips/download/delhi-police-government-of-delhi [https://perma.cc/EA5G-8KAD] (The RFP states that the CCTNS digital policing project will include police records, including of habitual offenders, “since inception”, and that such system should be designed to “capture the details of History Sheets/dangerous/ habitual offenders.”). but evidence from the U.S. suggests that the transition from manual to digital systems is not a binary shift, but instead a messy and negotiated process given that police officers often have greater familiarity and comfort with and fidelity to paper files. For example, accounts of CalGang’s upgrades describe how many officers were reluctant to replace paper files or input these records into the database,66Cal. Dept. of Justice, Technology Acquisition Project Case Study: California Department of Justice CAL/GANG System 6 (Draft Report), (forthcoming) (on file with authors). and the Chicago Police Department’s (CPD) CLEAR system is still described as a patchwork of digitized paper files along with other more advanced computer application modules.67. See Ferguson, supra note 29, at 18–21.

Second, SDSs can incorporate additional technical affordances like relational or link analysis, search functionality, and interoperability with other databases. Beyond the basic functions of recording and categorizing individuals, gang databases across the U.S. include additional features or third-party applications that may be integrated into the web browser-like interfaces.68. See, e.g., N.Y.C. Police Dep’t, Criminal Group Database: Impact & Use Policy 8 (2021) https://www1.nyc.gov/assets/nypd/downloads/pdf/public_information/post-final/criminal-group-database-nypd-Impact-and-use-policy_4.9.21_final.pdf [https://perma.cc/TW2B-UJCU] (“The NYPD purchases Criminal Group Database associated equipment or Software as a Service (SaaS)/software from approved vendors.”). One such additional functionality of such systems is the ability to use a search tool to query the database for records of a particular individual, members of a particular gang, or entries from a particular geographic area.69. See Ferguson, supra note 29, at 18–23. These databases also typically enable inter-and intra-government information sharing capabilities which allow police and other officials to query records across databases.70. See Leyton, supra note 26, at 113; Ferguson, supra note 29, at 24–26. Notably the plans to digitize policing databases in India and Nigeria highlight search and interoperability as a primary objective. See Ministry of Home Affairs Nat’l Crime Records Bureau, Gov. of India, CCTNS Good Practices and Success Stories 107 (2018), https://ncrb.gov.in/sites/default/files/Compiled-Compendium.pdf [https://perma.cc/Q45K-W33W] (positing that the CCTNS database “is a Google type search for Police Department.”). Another functionality observable across multiple gang databases is the mapping of relationality between individuals within the database. CalGang, whose design became the template for the commercially available GangNet used widely in the U.S. 
and Canada, was created to be this kind of relational database accessible through web portals.71. See Leyton, supra note 26, at 111. See also Raymond Dussault, GangNet: A New Tool in the
War on Gangs, Gov’t Tech. (Dec. 31, 1997), https://www.govtech.com/magazines/gt/GangNet-A-New-Tool-in-the.html [https://perma.cc/ZY5K-SAQF] (detailing uses of the commercially licensed GangNet system). It allows for over two hundred data fields for individuals listed in the database, and “can generate ‘link diagrams’ of gang associates out to three levels, which include photographs and are color coded to show associates’ gang affiliations.”72. See Leyton, supra note 26, at 111. The Chicago Police Department’s system also includes modules for “link analysis,” which claim to perform social network analysis by connecting “individuals with records of crimes for which they were arrested as well as individuals with whom they were arrested.”73. Citizen and Law Enforcement Analysis and Reporting (CLEAR), Ash Ctr. for Democratic Governance and Innovation (Jan. 1, 2007) https://ash.harvard.edu/news/citizen-and-law-enforcement-analysis-and-reporting-clear [https://perma.cc/W2QN-XELY]. Ferguson, supra note 29, at 18. The information available in link analysis can then be combined with “[ballistic] evidence or other data to link individuals to crimes.”74Ferguson, supra note 29, at 95.
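
To illustrate what generating a link diagram “out to three levels” can mean in practice, the following is a minimal Python sketch of a breadth-first traversal over recorded associations. It is not drawn from CalGang, GangNet, or CLEAR, whose internals are not described in the sources cited here; the function name `associates_within`, the dictionary layout, and the sample records are all hypothetical.

```python
from collections import deque


def associates_within(links, start, max_depth=3):
    """Return {person: link_distance} for everyone reachable from `start`
    within `max_depth` recorded associations (hypothetical helper)."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        person = queue.popleft()
        if depths[person] == max_depth:
            continue  # do not expand past the outermost level
        for other in links.get(person, ()):
            if other not in depths:
                depths[other] = depths[person] + 1
                queue.append(other)
    del depths[start]  # report associates only, not the starting record
    return depths


# Hypothetical association records; each link is stored in both directions.
links = {
    "A": ["B"],
    "B": ["A", "C"],
    "C": ["B", "D"],
    "D": ["C", "E"],
    "E": ["D"],
}

result = associates_within(links, "A")
print(result)
```

In this toy example a single recorded association between A and B is enough to place C and D, who have no direct link to A, on A’s diagram. The sketch is meant only to show how a relational affordance extends the reach of each individual entry.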

Finally, SDSs can generate statistical insights about individuals and groups. Despite being characterized as passive systems, databases can include algorithmic tools that generate statistical insights about individuals and groups that are included in the database. While some gang databases in the U.S. generate summary reports with descriptive statistics on geographic maps of areas with individuals identified as “gang-involved,”75. Id. at 9, 15–19. the U.K. Gangs Matrix goes further to offer risk assessments that guide and even prioritize policing resource allocations. In the Gangs Matrix, a “harm score” is assigned to each individual, which is essentially a color-coded score (red, amber, or green) based on the “level of violence [that they] have shown.”76Amnesty Int’l, supra note 33, at 6 (alterations in original). The algorithm that produces the harm score relies on “police information about past arrests, convictions, and ‘intelligence related to violence/weapons access,’” social media, and other informal sources.77. Id. The Metropolitan Police, the territorial police force responsible for London’s thirty-two boroughs, have not disclosed the criteria and weights for the automated scoring algorithm they developed.78. Id. at 15. Some sources suggest that the score depends on how many crimes the person was involved in during a three-year period, weighted according to the seriousness of the crime and its recency.79. Id. at 13. The harm scores are then ranked within each borough and the individuals with the top ten scores of each list are prioritized for enforcement.80. Id. at 13–14. While criminal intelligence databases are often viewed as being the foundation for more algorithmically advanced systems, the Gangs Matrix example, in particular, demonstrates the need to avoid any rigid semantic distinction between databases and algorithmic decision systems.
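
Because the actual criteria and weights have not been disclosed, the scoring logic can only be approximated. The following minimal Python sketch shows one way a score of the reported kind could be computed: incidents within a three-year window, weighted by seriousness and recency, then ranked within each borough. Every name and number here (`Incident`, `harm_score`, `top_per_borough`, the linear recency decay, the sample data) is an assumption made for illustration, not the Metropolitan Police’s method.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    seriousness: float  # hypothetical weight; real weights are undisclosed
    years_ago: float    # time elapsed since the recorded incident


def harm_score(incidents, window_years=3.0):
    """Sum seriousness over incidents inside the window, discounting
    older incidents with an assumed linear recency decay."""
    total = 0.0
    for inc in incidents:
        if inc.years_ago < window_years:
            recency = 1.0 - inc.years_ago / window_years
            total += inc.seriousness * recency
    return total


def top_per_borough(scores_by_borough, top_n=10):
    """Rank individuals within each borough and keep the top_n scores."""
    return {
        borough: sorted(scores, key=scores.get, reverse=True)[:top_n]
        for borough, scores in scores_by_borough.items()
    }


# Hypothetical record: the five-year-old incident falls outside the window.
example_score = harm_score(
    [Incident(4.0, 0.0), Incident(2.0, 1.5), Incident(10.0, 5.0)]
)
example_ranking = top_per_borough(
    {"Borough X": {"p1": 1.0, "p2": 3.0, "p3": 2.0}}, top_n=2
)
print(example_score, example_ranking)
```

Even in this simplified form, the ranking step means that someone is always prioritized in each borough regardless of how low the absolute scores are, which illustrates why a database with a scoring layer is difficult to separate from an algorithmic decision system.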

B. Legal Features

This category highlights common legal features and contexts that structure and potentially constrain SDS design, use, and outcomes. These conditions are necessary for evaluating how databases are operationalized in practice, how they are viewed and understood by the public, and how they impact society.

The first SDS legal feature observable across legal frameworks applicable to criminal intelligence databases is the use of vague and subjective labels and criteria for determining who should be included in these databases, which enable, conceal, and maintain the biased notions of group criminality embedded in these systems. As we explore in this Section, this definitional flexibility is neither benign nor accidental. The operationalization of these legal regimes demonstrates how amorphous legal definitions have created a system ripe for the disproportionate targeting of historically marginalized social groups, despite the veneer of individualized assessment.

Defining what constitutes a gang or a gang member has been a significant challenge for law enforcement in the U.S., particularly because law enforcement definitions and practices tend to foreground the criminal activities of gangs, whereas researchers and social welfare practitioners “tend to emphasize the social and cultural aspects of gang formation and activity.”81Leyton, supra note 26, at 114; see also Mercer L. Sullivan, Maybe We Shouldn’t Study “Gangs”: Does Reification Obscure Youth Violence?, 21 J. of Contemp. Crim. Just. 170, 171 (2005) (“Youth violence takes many organizational forms. Lumping these together as ‘gang’ phenomena carries distracting baggage . . . . It can, and sometimes does, cloud our view of what we should be placing front and center, the problem of youth violence.”); Charles M. Katz, Issues in the Production and Dissemination of Gang Statistics: An Ethnographic Study of a Large Midwestern Police Gang Unit, 49 Crime & Delinq. 485, 487 (2003) (“[P]olice do not necessarily document individuals because of their behavior but rather document individuals according to their own ideas and beliefs about gang members. . . . [T]his leads to officers documenting individuals based solely on where individuals live, with whom they associate, what they look like, or what clothes they wear.”);
Forrest Stuart, Code of the Tweet: Urban Gang Violence in the Social Media Age, 67 Soc. Probs. 191, 194 (2019) (describing how police assign residents to gang and other criminal databases based on social media activity that is misinterpreted as evidence of criminal activity). This schism can be partially attributed to law enforcement’s traditional approach to criminal profiling, which relies “on the correlation between behavioral factors and the past experience of law enforcement in discovering criminal behavior associated with those factors. Thus, profiling rests on the perceived accuracy of the profile as a predictor of criminality.”82William M. Carter, Jr., A Thirteenth Amendment Framework for Combating Racial Profiling, 39 Harv. C.R.-C.L. L. Rev. 17, 22 (2004).

Definitions are inconsistent across the country and are likely to reflect the political, social and financial pressures of any given jurisdiction.83Katz, supra note 81, at 486–89 (2003). A national study on gang-related laws found that only fifteen states have statutory definitions for gang members and five of these definitions are relatively generic.84. Id. See also Audit Div., L.A. Police Dep’t Chief of Police, AD No. 18-016, CalGang Criminal Intelligence System Audit 1, 7 (2019), http://www.lapdpolicecom.lacity.org/091019/BPC_19-0252.pdf [https://perma.cc/77SM-FUUR] (finding the most common criteria for including individuals in the CalGang System was whether they were “seen frequenting gang areas” and the second most frequent was whether they had “been seen associating with documented gang members.”). For instance, the statutory definitions have different requirements for how many individuals must participate in criminal activity to qualify as a gang: most states require three or more individuals, some require at least five individuals, and some do not specify a required number of members.85Julie Barrows & C. Ronald Huff, Gang and Public Policy: Constructing and Deconstructing Gang Databases, 8 Criminology & Pub. Pol’y 675, 683–85 (2009). Self-identification as a gang member is the only common criterion, and it requires no other corroborating evidence. Research suggests this is a fraught category that is particularly vulnerable to abuse.86. See, e.g., Citizens for Juv. Just., supra note 30, at 22 (highlighting incidents where police threaten and coerce young people to self-identify as gang-involved); Barbara Bradley Hagerty, The Other Police Violence, Atlantic (Sept.
17, 2020) https://www.theatlantic.com/ideas/archive/2020/09/other-police-violence/616363/ [https://perma.cc/J2CR-3QXN] (describing how official misconduct in interrogations lead to false self-admissions that disproportionately send innocent Black men to prison); #GuiltyPleaProblem, Innocence Project, https://guiltypleaproblem.org/ [https://perma.cc/S5AZ-7DG4#stats] (“nearly 11 percent of the nation’s 362 DNA-based exonerations since 1989 involved people who pleaded guilty to serious crimes they didn’t commit. Furthermore, according to the National Registry of Exonerations, 18 percent of known exonerees pleaded guilty”) (last visited June 24, 2022). With police officers increasingly interpreting gang membership based on content posted by individuals on social media, there is a significant risk of misunderstanding cultural practices and signals, where “[w]hat may be meant as a joke or recognized as a lyric to a favorite rap song is instead interpreted by outsiders as inculpatory.”87 . Priscilla Bustamante & Babe Howell, Report on the Bronx 120 Mass “Gang” Prosecution 27 (2019), https://static1.squarespace.com/static/5caf6f4fb7c92ca13c9903e3/t/5cf914a3db738b00010598b8/1559827620344/Bronx%2B120%2BReport.pdf [https://perma.cc/35YY-CLDX]; see also Stuart, supra note 81.

Definitional ambiguity results in heightened discretion for those implementing DBS and is often justified by socially constructed notions of the groups being targeted. Unlike other forms of organized crime, such as mafias or mobs, which are hierarchical groups with strict codes of conduct that exist for the criminal enterprise, gangs are more amorphous and characterized by their fluidity in membership, geographic mobility, and differential organizational structures. Criminal activity is not necessarily the centralizing focus.88Barrows & Huff, supra note 85, at 678–79 (2009); see also Malcolm W. Klein, Cheryl L. Maxson & Jody Miller, Introduction, in The Modern Gang Reader, viii–ix (Malcolm W. Klein, Cheryl L. Maxson & Jody Miller eds., 1995) (noting distinctions between street gangs, prison gangs, and criminal syndicates); Richard C. McCorkle & Terance D. Miethe, Panic: The Social Construction of the Street Gang Problem, 202–09 (2002) (problematizing the concept of gangs as coherent groups of individuals with shared goals and synthesizing research that has identified focuses other than criminality that gangs “organize” themselves around including territoriality, dress and graffiti); Judith Greene & Kevin Pranis, Just. Pol’y Inst., Gang Wars: The Failure of Enforcement Tactics and the Need for Effective Public Safety Strategies 10 (2007) (“Most experts agree that drug trafficking is a secondary interest for street gang members.”); Leyton, supra note 26, at 115 (“Most gangs are loosely structured, and many young people may join solely for safety or acceptance reasons rather than to participate in the gang’s criminal activities.”).
For example, then-New York City Police Department (NYPD) Commissioner Dermot Shea stated in his testimony about the Department’s gang database that gangs had evolved away from a traditional structure, noting: “their lack of a defined structure makes it difficult to predict their activities or document their association.”89Dermot Shea, Chief of Detectives, N.Y.C. Police Dep’t., Address at New York City Council Committee on Public Safety (June 13, 2018), https://councilnyc.viebit.com/player.php?hash=cYVufTvzRw2v [https://perma.cc/73UP-TGBM]. This fluidity has, in turn, become a justification for ensuring legal definitions allow maximum discretion for police officers. In 2015, the U.K. Home Office also sought to simplify and expand the definition of a gang and gang member “to make it less prescriptive and more flexible”; the new definition stated that a gang should be defined merely “as having one or more characteristics that enable its members to be identified as a group by others.”90Home Office & Karen Bradley, Changes in Legislation Reflected in New Gang Definition, Gov.UK (June 8, 2015), https://www.gov.uk/government/news/changes-in-legislation-reflected-in-new-gang-definition. Thus, this definitional ambiguity has provided police with unfettered discretion to use scant evidence not only to label individuals as gang members but also to promote beliefs in non-existent gangs, such as the “ACAB gang” referenced in the introduction.91. See Biscobing, supra note 5.

Finally, the existence of individualized assessment criteria for who is included within a database can distract from or even disguise biased notions of group criminality embedded in these systems. The use of such criteria in India demonstrates this. The widely criticized colonial-era Criminal Tribes Act, which criminalized marginalized groups, was replaced with a Habitual Offenders Act in several states post-independence, a move that has been widely described as “window dressing changes of nomenclature, from criminal tribes to habitual offenders.”92Brown, supra note 37, at 198. In states that have it, the Habitual Offenders Act determines the criteria for when a history sheet record is created against an individual, which subsequently leads to their inclusion in the surveillance database.93. See Satish, supra note 36.

While these statutes do generally require at least three convictions to trigger a history sheet record, this requirement obscures the fact that police still typically secure such convictions under colonial-era provisions often referred to as the “bad livelihood” sections, which apply to “suspicious persons” and “habitual offenders” without any requirement of a pre-existing criminal record.94. See Madhya Pradesh Police Regulations: Chapter VII (Security for Good Behaviors): Rule 839 Bad Livelihood Cases (demonstrating that these provisions are referred to as the “bad livelihood” provisions in police regulations). Id. at 250 (“Flexible as it was, the procedure of a bad-livelihood enquiry could erect quite a rigid frame of ‘habituality’. Someone ordered to furnish security under Section 109 or 110 was no longer simply a ‘suspected person’. He acquired a record and, for purposes of police surveillance or jail discipline, was often categorized with convicted habituals.”). Courts, too, have noted the use of these preventive provisions, specifically Sections 109 and 110 of the current Criminal Procedure Code, to arrest and harass individuals who often lack the financial resources to deposit the “good behavior” bond required under these provisions.95. See Madhya Pradesh Police Regulations: Chapter VII (Security for Good Behaviors): Rule 839 Bad Livelihood Cases. Interview by Amba Kak with Nikita Sonawane and Ameya Bokil, Attorneys, Criminal Justice & Police Accountability Project (Oct. 7, 2020) (transcript and recording on file with authors); see also, Sabari alias Sabarigiri v. Asst. Comm. of Police (2018), https://indiankanoon.org/doc/159399368/ [https://perma.cc/2SSS-XWQP] (“The Police seems to be adopting the practice of registering First Information Reports against the persons under Sections 109 and 110 of CrPC, just to open the history sheet and to justify the continuance of the name of the persons in the history sheet”). In states that do not have their own Habitual Offenders Act, the criteria for the creation of a history sheet record are stipulated via “standing orders”96. See generally, Police standing orders are rules or mandates that offer guidance to officers of police departments in India. They are issued at the state level and are administrative in nature. See Deputy Inspector General of… v. P. Amalanathan, (1966) 203 AIR (1964) (per Ramakrishnan), https://indiankanoon.org/doc/41451/ [https://perma.cc/GH66-DDTS]; B. Tilak Chandar, HC Asks Police to Review History Sheets Regularly, Hindu (Sept. 26, 2018, 8:45 PM), https://www.thehindu.com/news/cities/Madurai/hc-asks-police-to-review-history-sheets-regularly-madurai/article25050457.ece (Under Police Standing Order 746, history sheet can be opened against those involved in crime habitually). or prison manuals that are even more vague and include direct references to the repealed colonial-era regime, such as classifying individuals as “habitual criminals” based solely on whether someone has a bad livelihood (undefined) or even whether they are a member of an erstwhile criminal tribe.97Madhya Pradesh Jail Manual, 1987, Rule 411–12, http://jail.mp.gov.in/sites/default/files/Part%202_2.pdf [https://perma.cc/Q82J-V7Z8]. A recent ethnographic account of the implementation of surveillance databases in New Delhi includes a revealing quote from officers who admitted “some of the communities are still criminal, but they are no longer targeted as a group but as individuals.”98Khanikar, supra note 40, at 42.

The second legal feature of SDS is that, despite these vague, flexible, and biased criteria, inclusion in a database still inevitably translates into serious penal or other punitive consequences outside the carceral system. The consequences manifest in similar ways across the jurisdictions we have examined. Police rely on these databases to allocate surveillance resources and to determine which geographical areas or homes to target and which persons of interest to investigate and arrest, leading to the chronic over-policing of those in the databases.99For the U.S. see, for example, Ben Popper, How the NYPD is Using Social Media to Put Harlem Teens Behind Bars, Verge (Dec. 10, 2014), https://www.theverge.com/2014/12/10/7341077/nypd-harlem-crews-social-media-rikers-prison [https://perma.cc/HR8P-2FD] (describing the case of Jelani Henry, a young Black man from Harlem who was incarcerated at Rikers Island for 19 months based on the NYPD’s use of a gang database and social media monitoring to label him as a criminal affiliate). For India, see for example, Satish, supra note 36, at 133 (describing how surveillance databases play a critical role in justifying the rounding up and detaining of “anti-social elements” by the police before festivals, elections, and other political events). For the U.K. see, for example, StopWatch, Being Matrixed: The (Over)policing of Gang Suspects in London (August 2018), https://www.stop-watch.org/uploads/documents/Being_Matrixed.pdf [https://perma.cc/C2EY-JG9E] (describing how police officers continually patrol the same postcodes and routinely stop and search the same individuals on the Gang Matrix, and as a result, they are more likely to get picked up and charged for minor offences). These databases or the information within are also shared with other actors in the criminal justice system, which can exacerbate collateral consequences of the criminal justice system.100For example, a 2021 U.S. Immigration and Customs Enforcement Memorandum on federal immigration enforcement priorities lists individuals deemed a “threat to public safety”, which includes individuals with a gang conviction or “who intentionally participated in an organized criminal gang”, as a priority for deportation. U.S. Immigr. & Customs Enf’t, U.S. Dept. of Homeland Sec., Interim Guidance: Civil Immigration Enforcement and Removal Priorities (Feb. 18, 2021), https://www.ice.gov/doclib/news/releases/2021/021821_civil-immigration-enforcement_interim-guidance.pdf [https://perma.cc/UT2L-P6XH]. The directive is written in a way that can implicate individuals included on a gang database, and advocates warned that reliance on this criterion can exacerbate the discriminatory impact of police databasing efforts on Black, Latinx, and immigrant communities. Letter from GANGS Coalition, to Alejandro Mayorkas, Sec’y, Dept. of Homeland Sec. (Apr. 1, 2021), https://gangscoalition.medium.com/coalition-letter-on-interim-ice-guidance-7275abadfb67 [https://perma.cc/YA5B-D3C9]. Prison and jail officials rely on databases to make appropriate classifications and other decisions for security and institutional order.101For the U.S. see, for example, Jacobs, supra note 27, at 705–07 (2009); Leyton, supra note 26, at 122. For the U.K. see, for example, Amnesty Int’l, supra note 33, at 6. For India see, for example, Telephone Interview by Karishma Maria, Research Assistant to Amba Kak, AI Now Institute at New York University with Jeeyika Shiv, Associate Director, Fair Trial Initiative Project 39A (Sept. 12, 2020) (transcript on file with author) (describing how there are often separate barracks for “history sheet cases” and it is more difficult to obtain permission for visitation). Prosecutors rely on these databases to inform and craft criminal charges and plea bargains.102For the U.S., see, for example, Jacobs, supra note 27, at 705–07; Jeffrey Lane, The Digital Streets 128–149 (2019); Leyton, supra note 26, at 122; For the U.K. see, for example, Amnesty Int’l, supra note 33, at 8 (“the Gangs Matrix features information provided by the police to the Crown Prosecution Service (CPS) at the point when the CPS makes charging decisions.”). Judges rely on them to inform bail and sentencing decisions.103For the U.S. see, for example, Jacobs, supra note 27, at 705–07. For India see, for example, Satish, supra note 36, at 147.